DSTA 2019-20 Lab 6

SVM classification in sklearn

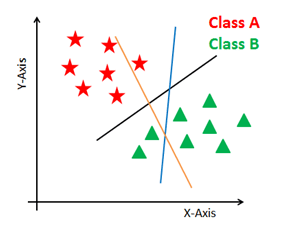

Support Vector Machines

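Provide supervised classification for linearly separable data.

Seek a line (in higher dimensions, a hyperplane), called the cutting hyperplane, that divides the space into two sides, one per class.

Measure: the distance from the cutting hyperplane to the closest data point.

Assumption: the cut is the zero level of the decision function, which is positive for one class and negative for the other.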
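As a minimal sketch of the measure above (not part of the original lab), the snippet below takes a hypothetical cutting line $w \cdot x + b = 0$ and computes the signed distance of a few made-up points from it. The names `w`, `b`, `points` and `signed_distance` are illustrative assumptions.

```python
import numpy as np

# Hypothetical cutting line x + y - 3 = 0, written as w . x + b = 0
w = np.array([1.0, 1.0])
b = -3.0

# Made-up 2D points, two on each side of the line
points = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, 2.0], [4.0, 4.0]])

def signed_distance(X, w, b):
    """Signed Euclidean distance of each row of X from the hyperplane w . x + b = 0."""
    return (X @ w + b) / np.linalg.norm(w)

dist = signed_distance(points, w, b)
sides = np.sign(dist)         # which side of the cut each point falls on
margin = np.abs(dist).min()   # distance from the closest point

print("signed distances:", dist)
print("sides:", sides)
print("margin:", margin)
```

The sign tells which side of the zero level a point is on, and the smallest absolute distance is the quantity an SVM tries to make as large as possible.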

(images courtesy of Datacamp)

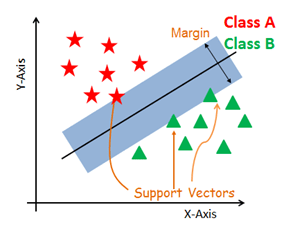

Support vectors are the data points closest to the border.

They determine the geometry of the cutting hyperplane.

The margin is the minimum distance from a data point to the border.

The data points determine which cutting hyperplanes are valid, i.e. which ones separate the two classes.

Among the valid hyperplanes, we choose the one that maximises the margin to the nearest points.
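A small sketch may help make these ideas concrete. It assumes a made-up, linearly separable toy dataset and uses a very large `C` to approximate a hard-margin SVM. The data and the choice of `C` are assumptions for illustration, not part of the lab.

```python
import numpy as np
from sklearn import svm

# Made-up, linearly separable 2D data (an assumption for illustration)
X = np.array([[0, 0], [1, 0], [0, 1], [3, 3], [4, 3], [3, 4]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])

# A very large C approximates a hard-margin SVM on separable data
clf = svm.SVC(kernel='linear', C=1e6)
clf.fit(X, y)

w = clf.coef_[0]        # normal vector of the cutting hyperplane
b = clf.intercept_[0]   # offset of the cutting hyperplane
margin = 1.0 / np.linalg.norm(w)  # distance from the hyperplane to the nearest points

print("support vectors:\n", clf.support_vectors_)
print("w =", w, "b =", b)
print("margin =", margin)
```

Only the points listed in `clf.support_vectors_` influence the fitted hyperplane; moving any other point slightly, without crossing the margin, should leave the solution unchanged.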

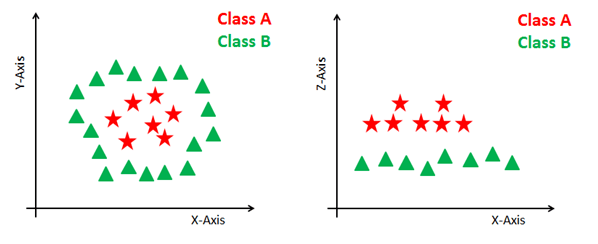

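- Normalization: the hyperplane parameters are rescaled so that the closest points lie at value $\pm 1$ of the decision function (unit margin).

- The geometry of the solution is driven by the data; details will be seen in class.

- Thanks to kernelization, we can also tackle instances that are not linearly separable, e.g. with the feature map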

$\phi(v) = (x, y, xy)$
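To illustrate the feature map above, here is a hedged sketch on an XOR-like toy dataset, which is an assumption and not part of the lab. The map is applied explicitly through a hypothetical helper `phi`, and a linear SVM is then fitted in the resulting 3D space.

```python
import numpy as np
from sklearn import svm

# XOR-like toy data (an assumption): the label depends on the sign of x*y
X = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1]], dtype=float)
y = np.array([1, 1, 0, 0])

def phi(V):
    """Map each row v = (x, y) to (x, y, x*y)."""
    return np.column_stack([V[:, 0], V[:, 1], V[:, 0] * V[:, 1]])

# In the original 2D space no straight line separates the two classes
acc_2d = svm.SVC(kernel='linear').fit(X, y).score(X, y)

# In the 3D feature space the plane "third coordinate = 0" separates them
acc_3d = svm.SVC(kernel='linear').fit(phi(X), y).score(phi(X), y)

print("training accuracy in 2D:", acc_2d)
print("training accuracy after phi:", acc_3d)
```

In practice the map is usually not computed explicitly: a kernel such as `kernel='poly'` or `kernel='rbf'` in `svm.SVC` works with inner products in the feature space instead.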

```python
from sklearn import datasets

# Load the breast cancer dataset and inspect its shape
cancer = datasets.load_breast_cancer()
print(cancer.data.shape)

from sklearn.model_selection import train_test_split

# Split dataset into training set and test set (70% / 30%)
X_train, X_test, y_train, y_test = train_test_split(
    cancer.data, cancer.target, test_size=0.3, random_state=109)

from sklearn import svm

# Train an SVM classifier with a linear kernel and predict on the test set
clf = svm.SVC(kernel='linear')
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)

from sklearn import metrics

# Model accuracy: how often is the classifier correct?
print("Accuracy:", metrics.accuracy_score(y_test, y_pred))
# Model precision: what fraction of tuples predicted positive are actually positive?
print("Precision:", metrics.precision_score(y_test, y_pred))
# Model recall: what fraction of actual positive tuples are labelled as such?
print("Recall:", metrics.recall_score(y_test, y_pred))
```
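The three scores printed by the lab code can also be recomputed by hand, which may help in reading them. The sketch below uses made-up labels (an assumption, unrelated to the breast cancer data) and counts true/false positives and negatives directly.

```python
import numpy as np

# Made-up true and predicted labels (1 = positive class), for illustration only
y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
y_hat = np.array([1, 1, 1, 0, 1, 0, 0, 0, 0, 0])

tp = int(np.sum((y_true == 1) & (y_hat == 1)))  # true positives
fp = int(np.sum((y_true == 0) & (y_hat == 1)))  # false positives
fn = int(np.sum((y_true == 1) & (y_hat == 0)))  # false negatives
tn = int(np.sum((y_true == 0) & (y_hat == 0)))  # true negatives

accuracy = (tp + tn) / len(y_true)  # fraction of all predictions that are correct
precision = tp / (tp + fp)          # fraction of predicted positives that are correct
recall = tp / (tp + fn)             # fraction of actual positives that are found

print("Accuracy:", accuracy)
print("Precision:", precision)
print("Recall:", recall)
```

Precision penalises false positives, while recall penalises false negatives, so the two can differ considerably on imbalanced data.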